Hello,

We have added a Robosense Helios lidar to the B1. We didn’t find any information resources on navigation for the B1. How can we integrate this lidar with the B1? Our goal is to enable obstacle avoidance. So far we are able to visualize the lidar data in RViz: we installed ROS Melodic on the main PC of the B1 and used the Robosense lidar’s ROS SDK to visualize the data.

Can anyone please help us with obstacle avoidance on the B1?

Dear @bishu,

Are you using the qre_b1 repo? It should have the required navigation files. You would just need to run the Helios driver and replace the topic names in the navigation parameters file of the b1_navigation package to enable obstacle avoidance.

No, we are not using any navigation repo at the moment. In fact, we didn’t know anything like that existed. So far we have only used the Robosense SDK for visualization and haven’t done anything to combine it with the B1. The repo link you mentioned in your reply didn’t open.

email: khanalbishwatma123@gmail.com

github: bishwatma7

Dear @bishu,

You have been added to our GitHub repository. Please accept the join request sent to the email address linked to your GitHub account.

Hello,

Thank you for the repo. We changed the topic name in the launch and include directories under b1_navigation.

But we are not able to see the map in RViz. We tried the gmapping launch file after changing the topic name to our lidar topic.

It complains about the frame ID and says that the tf data is missing. The lidar data is published with the frame ID rslidar, but no tf data is published for that frame, so whenever we run the gmapping launch file this error shows up:

Terminal (gmapping): (screenshot on Imgur)

RViz: (screenshot on Imgur)

Tf data monitor: (screenshot on Imgur)

Also, we noticed your repo is for ROS Noetic, but our robot has ROS Melodic. Could that also be a potential issue?

Thanks for the help!

It seems the URDF of the Helios on your B1 might not have the same link name as the frame being published. Please check whether there is a link called rslidar in your B1 URDF.

In this case it is not!

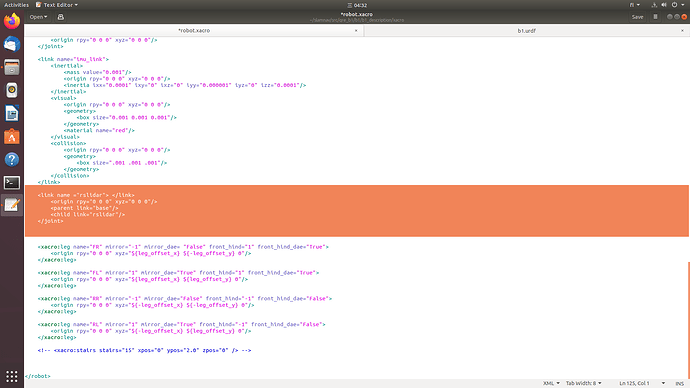

We didn’t have any link name configured in the B1 URDF for the Helios lidar. After you mentioned the link name, we added these things to b1.urdf and robot.xacro, which are under config in b1_description. We are still getting the same error. Could you please confirm whether this is the edit you meant, or whether some other files need to be configured?

Dear @bishu,

You only need to make the change in robot.xacro. The file shown here has incorrect joint tags. The second image is correct, though.

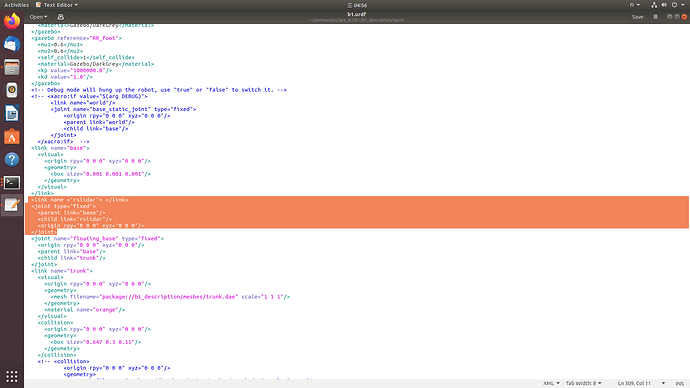

Another temporary way to test is to add a static transform via the CLI:

rosrun tf2_ros static_transform_publisher 0 0 0 0 0 0 1 base_link rslidar
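For reference, tf2_ros static_transform_publisher accepts the rotation either as three angles (yaw pitch roll) or as a quaternion (qx qy qz qw); the command above uses the quaternion form, where 0 0 0 1 means no rotation. If the lidar is mounted rotated relative to base_link, the conversion can be sketched roughly as below. The function name is only illustrative and is not part of qre_b1 or the Robosense SDK.

```python
import math


def ypr_to_quaternion(yaw, pitch, roll):
    """Convert yaw (about Z), pitch (about Y), roll (about X), in radians,
    to a quaternion in (qx, qy, qz, qw) order.

    Hypothetical helper for illustration only.
    """
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    qx = sr * cp * cy - cr * sp * sy
    qy = cr * sp * cy + sr * cp * sy
    qz = cr * cp * sy - sr * sp * cy
    qw = cr * cp * cy + sr * sp * sy
    return (qx, qy, qz, qw)


if __name__ == "__main__":
    # Zero rotation gives the identity quaternion used in the command above.
    print(ypr_to_quaternion(0.0, 0.0, 0.0))
    # Example: a lidar mounted turned 90 degrees to the left (yaw only).
    print(ypr_to_quaternion(math.pi / 2, 0.0, 0.0))
```

The translation values (the first three numbers) should likewise be replaced with the measured offset of the lidar from base_link once the test with zeros works.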

Hello Azib,

I didn’t find anything for the camera in this package. Could you please provide a package for the camera?

Dear @bishu,

Unfortunately, we do not have a camera package for the B1 as it is not provided by Unitree. Please visit this link to learn how you can access the camera stream.

Thank you for the link. I have access to qre_b1 but not qre_go1. Could you please give me access with the same GitHub account that you added to qre_b1?

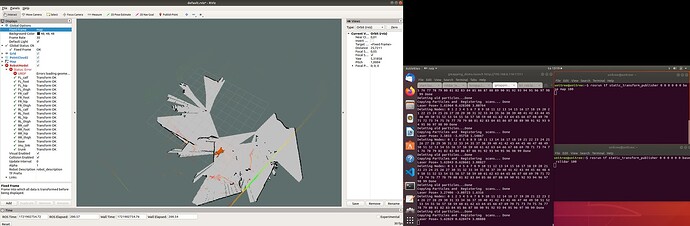

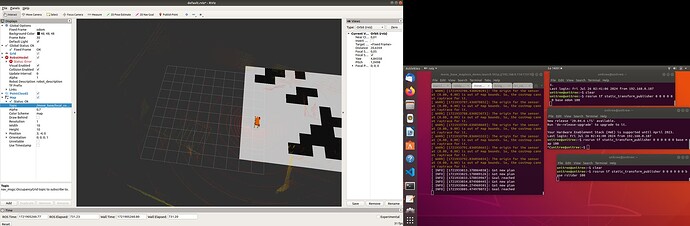

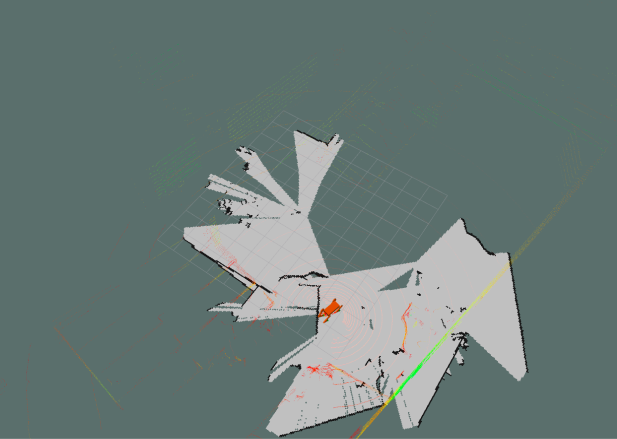

Hello, we have tried publishing the different static transforms that were required (judging by the errors and warnings). For some reason, the map is not being generated properly; it seems the robot is not able to localize itself. The position of the robot fluctuates in RViz, and when generating the map, the walls overlap. When we move the robot with the joystick to cover a larger area of the map, it suddenly jumps and detects itself in a completely wrong position. What do you think might be the problem? Below I have attached the position of the lidar on the B1.

It does detect the arm at its back, but I thought that would not be a problem if we send the navigation goals forward.

As you can see, the walls overlap and the map looks very bad. We have tried all the launch files in b1_navigation of qre_b1, and none of them works properly. (mapless_movebase completes its goal when the goal is very near, but when the goal is far it does not work as it should.) Could you please assist me in getting smooth navigation for the B1?

Dear Bishu,

Are you using gmapping? If so, I recommend switching to SLAM toolbox. @Azib will send you a pre-configured one for a different robot that you can adapt to the B1.

Also, if the area looks exactly the same in all directions, the estimated pose may jump ("teleport"). To avoid this, choose a lidar height that captures the most features of the environment.

Please configure the deadzone of the 3D lidar correctly (in the lidar driver) so that it doesn’t detect the arm. Otherwise the arm will cause an issue in map_navigation.
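To illustrate what such a deadzone does, here is a minimal sketch that drops points whose horizontal angle falls inside a blocked sector. It assumes the lidar frame has x pointing forward and y to the left, and that the arm sits roughly behind the sensor; the function names and the 135 to 225 degree sector are made up for the example. The real setting belongs in the lidar driver configuration, whose parameter names are not shown in this thread.

```python
import math


def outside_dead_zone(x, y, start_deg, end_deg):
    """Return True if the point's azimuth lies outside the blocked sector.

    Azimuth is measured counter-clockwise from the +x axis, in [0, 360).
    Hypothetical helper for illustration; not a Robosense driver API.
    """
    azimuth = math.degrees(math.atan2(y, x)) % 360.0
    if start_deg <= end_deg:
        return not (start_deg <= azimuth <= end_deg)
    # The blocked sector wraps through 0 degrees (e.g. 315 to 45).
    return not (azimuth >= start_deg or azimuth <= end_deg)


def filter_points(points, start_deg, end_deg):
    """Keep only the (x, y, z) points outside the blocked sector."""
    return [p for p in points if outside_dead_zone(p[0], p[1], start_deg, end_deg)]


if __name__ == "__main__":
    cloud = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.2), (0.0, 1.0, 0.0), (-1.0, -0.1, 0.3)]
    # Block the rear sector, where the arm is assumed to be mounted.
    print(filter_points(cloud, 135.0, 225.0))
```

Points behind the sensor are removed while points to the front and side are kept, so the arm no longer appears as an obstacle in the scan.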

Yes, we will try and help though the coming weeks will be busy as I will be on a business trip!

Hello Sohail,

I managed to mask out the deadzone of the lidar, so now it can only see to the front. I don’t understand what you meant by not using gmapping and using slam-toolbox instead. I was waiting for the pre-configured toolbox that you mentioned, but I haven’t received it yet. However, I have tried installing slam-toolbox and used the mapper params for online async mode. With that, it only generates a map from its first scan, and then the map does not update.

Could you please mention which launch files you launch when you use gmapping_demo.launch in the qre_b1 package? I run the lidar launch files and two static publishers as below:

rosrun tf static_transform_publisher 0 0 0 0 0 0 base map 100

rosrun tf static_transform_publisher 0 0 0 0 0 0 base rslidar 100

I can see the map being created, but it detects the same thing multiple times at different coordinates, so it looks very messy.

Do you think this rotation has something to do with a wrong frame configuration? For your information, movebase_mapless_demo.launch seems to work fine when we give shorter goals using the 2D Nav Goal tool in RViz. However, it sometimes complains that it is not able to produce a path, so we want to add a good map to enable smoother navigation.

Lastly, if you recommend using other ways of mapping, could you also mention how we can configure correctly with the existing amcl_demo.launch in qre_b1 package or any possible navigation that you’d recommend?
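On the frame-configuration question above: under the usual ROS convention (REP 105) the chain is map -> odom -> base -> sensor, with the SLAM node publishing map -> odom, and every frame has exactly one parent. A static transform that makes map a child of base may conflict with that, though it may or may not be the cause here. The sketch below checks a list of parent/child pairs for two parents or loops. The odom frame and the map -> odom and odom -> base edges are assumptions about what gmapping and the robot odometry would publish; they are not taken from this thread, and the function is only illustrative.

```python
def find_tf_issues(edges):
    """Check (parent, child) frame pairs for multiple parents and loops.

    Returns a list of human-readable problem descriptions (empty if none).
    Hypothetical helper for illustration; it does not talk to ROS.
    """
    issues = []
    parent_of = {}
    for parent, child in edges:
        if child in parent_of and parent_of[child] != parent:
            issues.append(f"{child} has two parents: {parent_of[child]} and {parent}")
        else:
            parent_of[child] = parent
    # Walk upwards from every frame; revisiting a frame means there is a loop.
    for frame in sorted(parent_of):
        seen = {frame}
        current = frame
        while current in parent_of:
            current = parent_of[current]
            if current in seen:
                issues.append(f"loop reachable from {frame}")
                break
            seen.add(current)
    return issues


if __name__ == "__main__":
    # The two static publishers above, plus the assumed SLAM and odometry edges.
    as_posted = [("base", "map"), ("base", "rslidar"), ("map", "odom"), ("odom", "base")]
    # The conventional layout, with only the lidar transform published statically.
    conventional = [("map", "odom"), ("odom", "base"), ("base", "rslidar")]
    print(find_tf_issues(as_posted))
    print(find_tf_issues(conventional))
```

On a running system, the actual tree can be inspected with rosrun tf view_frames and compared against the conventional layout.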

To generate a map with the SLAM toolbox, you need to move the robot. For a highly accurate and detailed map, we recommend moving the robot slowly.

Regarding the other issues that you are facing with SLAM, I suggest checking out some tutorials on SLAM navigation. Additionally, our documentation on SLAM might be helpful.

If you are still having trouble understanding SLAM navigation, we offer training on navigation and ROS1. You can view the curriculum for our training program here.

Hello,

We have managed to create a better map by adjusting the Robosense SDK configuration. We have a better navigation system now. Thanks a lot for your help.